The David vs Goliath Construction SEO Battle

I expected mega contractors to dominate SEO. Bigger budgets should mean better digital performance, right? Not quite. My recent research, which compared 100 mid-sized construction contractors (≈$100…

Construction SEO Benchmarks

It all started with this question during one of AltCMO’s weekly Innovation Meetings: “Do the ‘big guys’ in Construction care about SEO?” If you ask most people in Construction, they’ll tell you the industry is built on word-of-mouth, relationships, and low bids. A construction company’s website is usually a secondary source for business development and […]

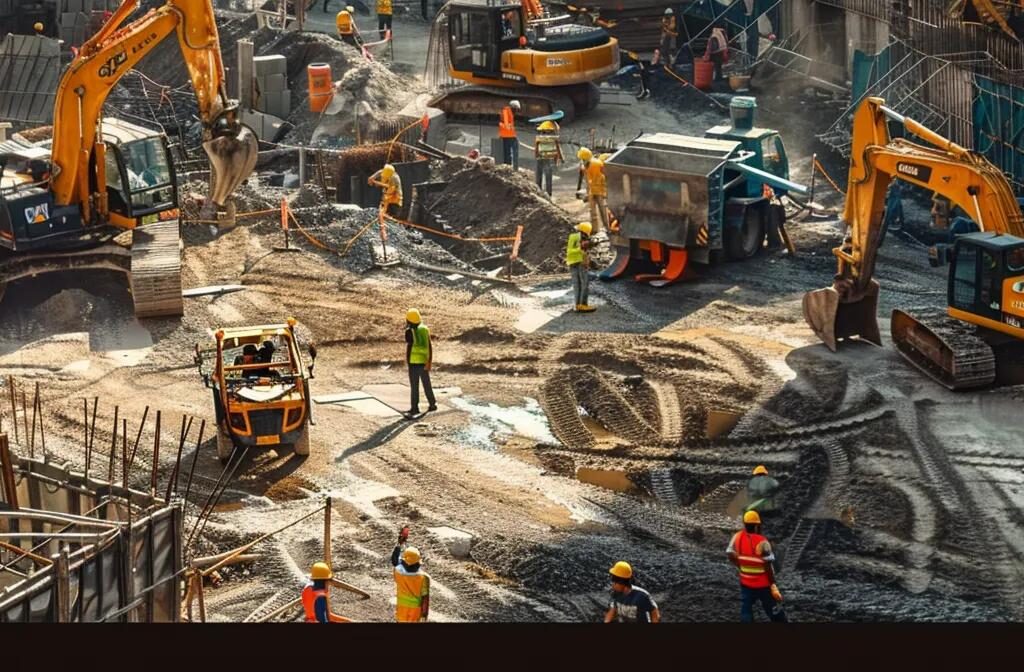

SEO Best Practices for Construction Company Websites

Achieving SEO success is vital for construction sites. Implement best practices to enhance visibility, drive traffic, and boost your business’s growth.

Optimize Your Construction Website With Effective On-Page SEO Strategies

Master on-page SEO essentials for construction websites to enhance visibility and attract more clients. Optimize strategies now for better online performance.

Boost Your Construction Site’s Online Visibility With Effective SEO Strategies

Boost online visibility for construction sites with expert tips. Improve your online presence, attract more clients, and enhance project awareness effectively.

Maximizing SEO Through Effective Mobile Optimization Strategies for Construction Company Sites

Maximize SEO impact by focusing on mobile optimization for construction companies. Enhance user experience and increase traffic with efficient strategies today.

Maximize Your Construction Site With Effective SEO Strategies

Unlock effective SEO strategies to enhance construction websites. Learn how to optimize visibility and attract more clients with proven techniques.

Effective Local SEO Strategies to Boost Your Construction Company on Google Maps

Boost a construction company’s visibility on Google Maps with effective local SEO strategies. Enhance your online presence and attract more clients today.

Boost Your Construction Site With Effective SEO Strategies

In the competitive landscape of the construction industry, many businesses struggle to stand out online. A recent study shows that 75% of users never scroll past the first page of search results, highlighting the need for effective SEO strategies. This article will explore advanced techniques tailored for construction marketing consultants, including keyword optimization, website […]

Why It’s Important to Identify and Document Website Crawl Errors

A website’s functionality and user experience can make or break first impressions. Crawl errors, such as 404 errors, broken links, and redirect chains, are among the most common culprits that degrade a website’s performance. Addressing these issues isn’t just a matter of technical housekeeping; it’s a vital aspect of maintaining your site’s visibility, usability, and overall effectiveness. […]